All three components will be in production this time next year

OK, we will see then how good they are.

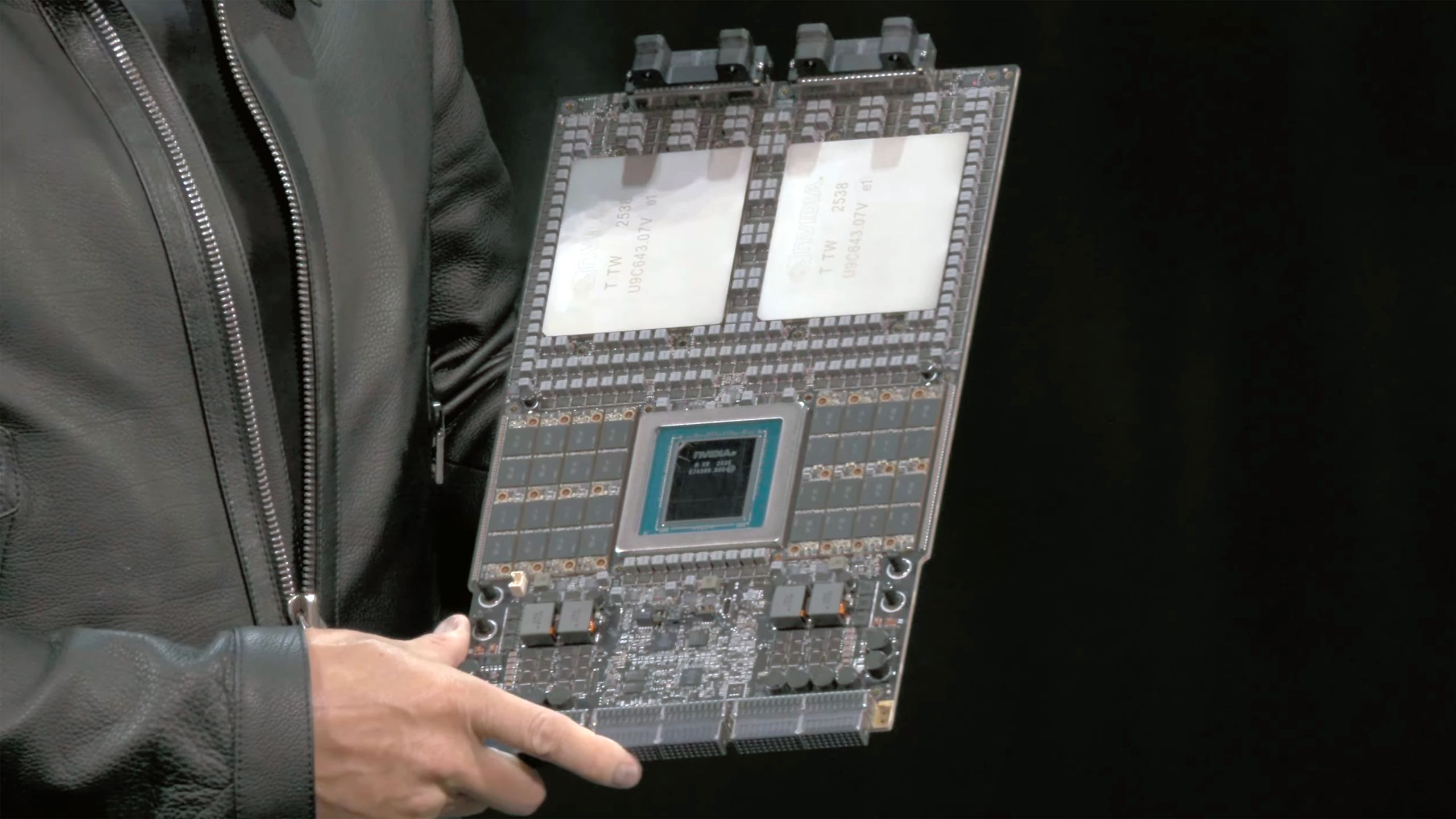

Pretty awful pictures. It's hard to tell whether the monster in the center of the board is a single-chip or chiplet design. It looks like a single chip, though, so it's guaranteed to be insanely expensive to make. Let’s hope AMD or others can really break into the compute market with some more competitive products.

It’s definitely chiplet, as the article points out. You can see the seams.

14 GB of VRAM?

Finally, I can get good frame rate in Monster Hunter: Wilds

Sorry, no OpenGL support.

Kinda odd. 8 GPUs to a CPU is pretty much standard, and less ‘wasteful,’ as the CPU ideally shouldn’t do much for ML workloads.

Even wasted CPU aside, you generally want 8 GPUs to a pod for inference, so you can batch a model as much as possible without physically going ‘outside’ the server. It makes me wonder if they just can’t put as much PCIe/NVLink on it as AMD can?

LPCAMM is sick though. So is the sheer compactness of this thing; I bet HPC folks will love it.