I think a substantial part of the problem is the employee turnover rates in the industry. It seems to be just accepted that everyone is going to jump to another company every couple of years (usually due to companies not giving adequate raises). This leads to a situation where, consciously or subconsciously, no one really gives a shit about the product. Everyone does their job (and only their job, not a hint of anything extra), but they’re not going to take on major long-term projects, because they already have one foot out the door, looking for the next job. Shitty middle management of course drastically exacerbates the issue.

I think that’s why there’s a lot of open source software that’s better than the corporate stuff. Half the time it’s just one person working on it, but they actually give a shit.

The article is very much off point.

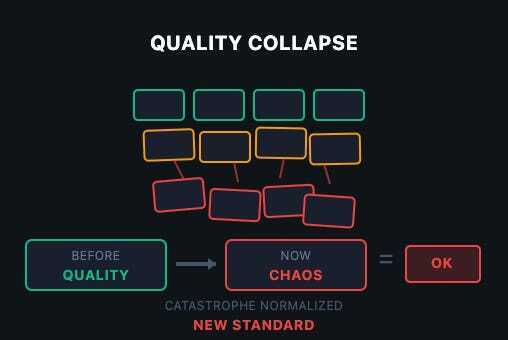

- It’s not that software quality was great until 2018 and then suddenly declined. Software quality has been as shit as legally possible since the dawn of (programming) time.

- The software crisis has never ended. It has only been increasing in severity.

- Ever since, we have been trying to squeeze more programming productivity out of software developers at the cost of runtime performance.

The main issue is the software crisis: Hardware performance follows Moore’s law, developer performance is mostly constant.

If the memory of your computer is counted in bytes without an SI prefix and your CPU has maybe a dozen or two instructions, then it’s possible for a single human being to comprehend everything the computer is doing and to program it very close to optimally.

The same is not possible if your computer has subsystems upon subsystems and even the keyboard controller has more power and complexity than the whole Apollo program.

So to program exponentially more complex systems we would need exponentially more software developer budget. But since it’s really hard to scale software developers exponentially, we’ve been trying to use abstraction layers to hide complexity, to share and re-use work (no need for everyone to re-invent the templating engine) and to have clear boundaries that allow for better cooperation.

That was already the case long before Electron. Compiled languages started the trend, languages like Java or C# deepened it, and modern middleware and frameworks just increased it.

OOP complains about the chain “React → Electron → Chromium → Docker → Kubernetes → VM → managed DB → API gateways”. But he doesn’t even consider that even if you run “straight on bare metal” there’s a whole stack of abstractions in between your code and the execution. Every major component inside a PC nowadays runs its own separate dedicated OS that neither the end user nor the developer of ordinary software ever sees.

But the main issue always reverts back to the software crisis. If we had infinite developer resources we could write optimal software. But we don’t so we can’t and thus we put in abstraction layers to improve ease of use for the developers, because otherwise we would never ship anything.

If you want to complain, complain to the managers who don’t allocate enough resources and to the investors who don’t want to dump millions into the development of simple programs. And to the customers who aren’t ok with simple things but who want modern cutting-edge everything in their programs.

In the end it’s sadly really the case: Memory and performance get cheaper in an exponential fashion, while developers are still mere humans and their performance stays largely constant.

So which of these two values SHOULD we optimize for?
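To put rough numbers on that gap, here is a back-of-the-envelope sketch. It assumes hardware capacity per dollar doubles every two years (a common simplification of Moore’s law, and purely an assumption here) while per-developer output stays flat. The function names are made up for illustration.

```python
def hardware_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Relative hardware capacity after `years`, assuming steady doubling."""
    return 2.0 ** (years / doubling_period_years)


def developer_factor(years: float) -> float:
    """Relative per-developer output, assumed constant over time."""
    return 1.0


for years in (10, 20, 30):
    gap = hardware_factor(years) / developer_factor(years)
    print(f"after {years} years: hardware x{hardware_factor(years):.0f}, "
          f"developer x{developer_factor(years):.0f}, gap x{gap:.0f}")
```

Under those assumptions the gap is roughly a thousandfold after 20 years, which is the economic pressure behind trading machine efficiency for developer time.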

The real problem in regards to software quality is not abstraction layers but “business agile” (as in “business doesn’t need to make any long term plans but can cancel or change anything at any time”) and lack of QA budget.

Yeah, I hate that agile way of dealing with things. Business wants prototypes ASAP, but if one is actually deemed useful, you have no budget to productize it, which means that if you don’t want to take all the blame for a crappy app, you have to invest heavily in all of the prototypes. Prototypes that are called next-gen projects but get cancelled nine times out of ten 🤷🏻‍♀️. Make it make sense.

This. Prototypes should never be taken as the basis of a product, that’s why you make them. To make mistakes in a cheap, discardable format, so that you don’t make these mistakes when making the actual product. I can’t remember a single time though that this was what actually happened.

They just label the prototype an MVP and suddenly it’s the basis of a new 20 year run time project.

In my current job, they keep switching around everything all the time. Got a new product, super urgent, super high-profile, highest priority, crunch time to get it out in time, and two weeks before launch it gets cancelled without further information. Because we are agile.

The software crysis has never ended

MAXIMUM ARMOR

THANK YOU.

I migrated services from LXC to Kubernetes. One of these services has been exhibiting concerning memory footprint issues. Everyone immediately went “REEEEEEEE KUBERNETES BAD EVERYTHING WAS FINE BEFORE WHAT IS ALL THIS ABSTRACTION >:(((((”.

I just spent three months doing optimization work. For memory/resource leaks in that old C codebase. Kubernetes didn’t have fuck-all to do with any of those (which is obvious to literally anyone who has any clue how containerization works under the hood). The codebase just had very old-fashioned manual memory management leaks as well as a weird interaction between jemalloc and RHEL’s default kernel settings.

The only reason I spent all that time optimizing and we aren’t just throwing more RAM at the problem? Due to incredible levels of incompetence business-side I’ll spare you the details of, our 30 day growth predictions have error bars so many orders of magnitude wide that we are stuck in a stupid loop of “won’t order hardware we probably won’t need but if we do get a best-case user influx the lead time on new hardware is too long to get you the RAM we need”. Basically the virtual price of RAM is super high because the suits keep pinky-promising that we’ll get a bunch of users soon but are also constantly wrong about that.

Software has a serious “one more lane will fix traffic” problem.

Don’t give programmers better hardware or else they will write worse software. End of.

This is very true. You don’t need a bigger database server, you need an index on that table you query all the time that’s doing full table scans.
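A minimal sketch of that point, using Python’s built-in `sqlite3` module and a made-up `orders` table (the table, column, and index names are assumptions for illustration, not from any real system). It asks SQLite for its query plan before and after adding an index on the filtered column:

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's plan for `sql` as one human-readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail); the detail column is the readable part.
    return " | ".join(row[3] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 0.5) for i in range(10_000)],
)

sql = "SELECT total FROM orders WHERE customer_id = ?"

# Without an index, SQLite has to scan the whole table.
plan_before = query_plan(conn, sql, (42,))

# Hypothetical index on the column we filter by all the time.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan varies by SQLite version, but the first plan should report a scan of `orders` and the second a search using `idx_orders_customer`. Same hardware, same query, far less work.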

Or sharding on a particular column

Accept that quality matters more than velocity. Ship slower, ship working. The cost of fixing production disasters dwarfs the cost of proper development.

This has been a struggle my entire career. Sometimes, the company listens. Sometimes they don’t. It’s a worthwhile fight, but it is a systemic problem caused by management and short-term profit-seeking over healthy business growth.

“Apparently there’s never the money to do it right, but somehow there’s always the money to do it twice.”

Management never likes to have this brought to their attention, especially in a Told You So tone of voice. One thinks if this bothered pointy-haired types so much, maybe they could learn from their mistakes once in a while.

We’ll just set up another retrospective meeting and have a lessons learned.

Then we won’t change anything based off the findings of the retro and lessons learned.

Post-mortems always seemed like a waste of time to me, because nobody ever went back and read that particular Confluence page (especially the executives who made the same mistake again).

Post mortems are for, “Remember when we saw something similar before? What happened and how did we handle it?”

Twice? Shiiiii

Amateur numbers, lol

There’s levels to it. True quality isn’t worth it, absolute garbage costs a lot though. Some level that mostly works is the sweet spot.

“AI just weaponized existing incompetence.”

Daamn. Harsh but hard to argue with.

Weaponized? Probably not. Amplified? ABSOLUTELY!

It’s like taping a knife to a crab. Redundant and clumsy, yet strangely intimidating

Love that video. Although it wasn’t taped on. The crab was full on about to stab a mofo

Yeah, crabby boi fully had stabbin’ on his mind.

Non-technical hiring managers are a bane for developers (and probably bad for any company). Just saying.

Quality in this economy? We need to fire some people to cut costs and use telemetry to make sure everyone that’s left uses AI to pay AI companies, because our investors demand it, because they invested all their money in AI and they see no return.

They mainly show what’s possible if you

- don’t have a deadline

- don’t have business constantly pivoting what the project should be like, often last minute

- don’t have to pass security testing

- don’t have customers who constantly demand something else

- don’t have constantly shifting priorities

- don’t have tight budget restrictions where you have to be accountable to business for every single hour of work

- don’t have to maintain the project for 15-20 years

- don’t have a large project scope at all

- don’t have a few dozen people working on it, spread over multiple teams or even multiple clusters

- don’t have non-technical staff dictating technical implementations

- don’t have to chase the buzzword of the day (e.g. Blockchain or AI)

- don’t have to work on some useless project that mostly exists for political reasons

- can work on the product as long as you want, when you want and do whatever you want while working at it

Comparing hobby work that people do for fun with professional software and pinning the whole difference on skill is missing the point.

The same developer might produce an amazing 64k demo in their spare time while building mass-produced garbage-level software at work. Because at work you aren’t doing what you want (or even what you can) but what you are ordered to.

In most setups, delivering something that wasn’t asked for (even if it might be better) will land you in trouble if you do it repeatedly.

In my spare time I made the Fairberry smartphone keyboard attachment and now I am working on the PEPit physiotherapy game console, so that chronically ill kids can have fun while doing their mind-numbingly monotonous daily physiotherapy routine.

These are projects that dozens of people are using in their daily life.

In my day job I am a glorified code monkey keeping the backend service for some customer loyalty app running. Hardly impressive.

If an app is buggy, it’s almost always bad management decisions, not low developer skill.

Accurate but ironically written by ChatGPT

And you can’t even zoom into the images on mobile. Maybe it’s harder than they think if they can’t even pick their blogging site without bugs

Don’t give clicks to Substack blogs. Fucking Nazi enablers.

Yeah, my favorite is when they figure out what features people are willing to pay for and then paywall everything that makes an app useful.

And after they monetize that fully and realize that the money is not endless, they switch to a subscription model. So that they can have you pay for your depreciating crappy software forever.

But at least you know it kind of works while you’re paying for it. It takes way too much effort to find some other unknown piece of software for the same function, and it usually performs worse than what you had until the developers figure out how to make the features work, before putting it behind a paywall and subscription model all over again.

But along the way, everyone gets to be miserable, from the users to the developers and the project managers. Everyone except, of course, the shareholders, because they get to make money no matter how crappy their product, which they don’t use anyway, becomes.

A great recent example of this is Plex. It used to be open source and free, then it got more popular and started developing other features, and they asked people to pay a reasonable amount for them.

After it got more popular and easy to use and set up, they started jacking up the prices, removing features and forcing people to buy subscriptions.

Your alternative now is to go back to a less fully featured, more difficult to set up, but open source alternative, something like Jellyfin. Except that most people won’t know how to set it up, way fewer devices and TVs support its software, and you can’t get it to work easily for your technologically illiterate family and/or friends.

So again, your choices are: stay with a crappy, commercialized, money-grubbing, subscription-based product that at least works and is fully featured for now, until they decide to stop. Or get a new, less developed, more difficult to set up, highly technical, and less supported product that’s open source and hope that it doesn’t fall into the same pitfalls as its user base and popularity grow.

I’m glad that they added CrowdStrike to that article, because it adds a whole extra level of incompetency in the software field. The CS incident as a whole should never have happened in the first place if Microsoft had properly enforced the stance they claim to have regarding driver security and the kernel.

The entire reason CS was able to create that systemic failure was because they were (still are?) abusing the system MS has in place for signing kernel-level drivers. The process dodges MS review by using a standalone certified driver that is then live-patched, instead of requiring every update to be reviewed and certified. This type of system allowed for a live update that directly modified the kernel via the already certified driver. Remote injection of uncertified code into a secure location should never have been allowed in the first place. It was a failure on every level for both MS and CS.

Being obtuse for a moment, let me just say: build it right!

That means minimalism! No architecture astronauts! No unnecessary abstraction! No premature optimisation!

Lean on opinionated frameworks so as to focus on coding the business rules!

And for the love of all that is holy, have your developers sit next to the people that will be using the software!

All of this will inherently reduce runaway algorithmic complexity, prevent the sort of artisanal work that causes leakiness, and speed up your code.

I don’t trust some of the numbers in this article.

Microsoft Teams: 100% CPU usage on 32GB machines

I’m literally sitting here right now on a Teams call (I’ve already contributed what I needed to), looking at my CPU usage, which is staying in the 4.6% to 7.3% CPU range.

Is that still too high? Probably. Have I seen it hit 100% CPU usage? Yes, rarely (but that’s usually a sign of a deeper issue).

Maybe the author is going with worst case scenario. But in that case he should probably qualify the examples more.

Naah bro, Teams is a trash resource hog. What you are saying is essentially ‘it works on my computer’.

nonsense, software has always been crap, we just have more resources

the only significant progress will be made with rust and further formal enhancements

I’m sure someone will use Rust to build a bloated reactive declarative dynamic UI framework that wastes cycles, eats memory, and is inscrutable to debug.